Record retention as a regulatory obligation

Record retention requirements exist to ensure that firms preserve evidence of material decisions, communications, and supervisory actions. These requirements apply to data-related records just as they apply to traditional books and records.

From a regulatory perspective, retention isn’t about storing everything indefinitely. It’s about ensuring that records relevant to data use, interpretation, and oversight are retained for the appropriate period and can be produced promptly upon request.
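The idea of retaining records "for the appropriate period" can be illustrated with a small retention-schedule check. This is a minimal sketch under stated assumptions. The record types, the year values in `RETENTION_YEARS`, and the names `RecordMeta`, `retain_until`, and `must_retain` are all hypothetical placeholders. They are not periods taken from any rule; actual periods come from the applicable regulations and firm policy.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical retention schedule (record type -> years). Placeholder values
# for illustration only; actual periods come from the applicable rules and firm policy.
RETENTION_YEARS = {
    "data_selection": 6,
    "model_approval": 6,
    "ad_hoc_analysis": 3,
}

@dataclass(frozen=True)
class RecordMeta:
    record_id: str
    record_type: str
    created: date

def add_years(d: date, years: int) -> date:
    """Shift a date by whole years; Feb 29 maps to Feb 28 in non-leap target years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)

def retain_until(rec: RecordMeta) -> date:
    """Date the retention period ends. Unknown record types raise KeyError
    instead of silently falling back to a default period."""
    return add_years(rec.created, RETENTION_YEARS[rec.record_type])

def must_retain(rec: RecordMeta, today: date) -> bool:
    """True while the record is still inside its retention period."""
    return today < retain_until(rec)

rec = RecordMeta("r-001", "ad_hoc_analysis", date(2020, 3, 1))
print(retain_until(rec))                   # 2023-03-01
print(must_retain(rec, date(2022, 12, 31)))  # True
```

Raising on an unknown record type is a deliberate choice in this sketch: a record type with no assigned period is treated as a policy gap to resolve, not something to paper over with a default.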

Failure to retain records undermines a firm’s ability to demonstrate compliance, regardless of how well decisions were made.

What audit replay means in practice

Audit replay refers to a firm’s ability to reconstruct how a decision, analysis, or communication was produced at a specific point in time. This includes the data used, the assumptions applied, the approvals obtained, and the controls in place.

Regulators expect firms to be able to answer questions such as:

What data was used?

Why was it used?

How was it interpreted or transformed?

Who reviewed or approved its use?

What alternatives or limitations were considered?

Audit replay doesn’t require perfect reconstruction of every interaction. It requires sufficient records to establish a coherent and credible narrative.
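One way to make the replay questions operational is to capture them as fields of a per-decision record and flag any that were left unanswered. The sketch below assumes a hypothetical `ReplayRecord` structure with one field per question. It checks only that each answer is present, not that it is adequate, which matches the aim of a coherent narrative rather than perfect reconstruction.

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass
class ReplayRecord:
    """Hypothetical record answering the replay questions for one decision."""
    decision_id: str
    data_used: Optional[str] = None       # What data was used?
    rationale: Optional[str] = None       # Why was it used?
    transformation: Optional[str] = None  # How was it interpreted or transformed?
    approver: Optional[str] = None        # Who reviewed or approved its use?
    limitations: Optional[str] = None     # What alternatives or limitations were considered?

def missing_answers(rec: ReplayRecord) -> list[str]:
    """Return the names of replay fields left empty, in declaration order."""
    return [
        f.name
        for f in fields(rec)
        if f.name != "decision_id" and not getattr(rec, f.name)
    ]

r = ReplayRecord("d-1", data_used="vendor price file", rationale="month-end valuation")
print(missing_answers(r))  # ['transformation', 'approver', 'limitations']
```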

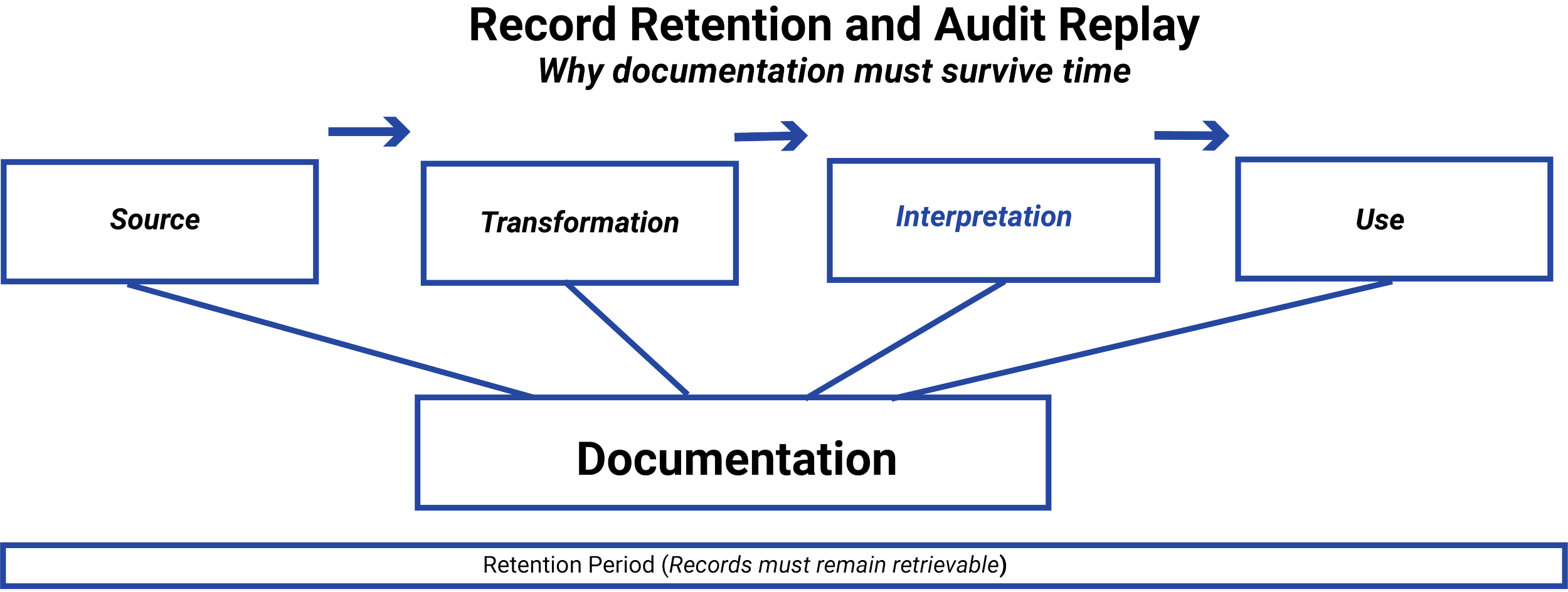

Retention must align with data lifecycle

A common weakness is retaining only final outputs while upstream data decisions and transformations are lost. Regulators assess recordkeeping across the full data lifecycle, not just at endpoints.

Records related to data selection, interpretation, transformation, supervision, and reuse must be retained in a way that allows those steps to be reconstructed. When retention policies are misaligned with how data actually flows through the organization, audit replay becomes difficult or impossible.
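Lifecycle-aligned retention can be pictured as a chain of step records, each pointing to the upstream record it depends on. Replay then walks from a final output back to its origin, and a missing link surfaces as an explicit gap instead of a silent hole. This is a simplified sketch: real lineage is usually a graph with several inputs per step, and `StepRecord`, `LineageGap`, and `replay_chain` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class StepRecord:
    record_id: str
    stage: str                # e.g. "selection", "transformation", "supervision"
    description: str
    parent_id: Optional[str]  # upstream record this step depends on; None at the origin

class LineageGap(Exception):
    """Raised when an upstream record referenced by a step was not retained."""

def replay_chain(store: dict[str, StepRecord], output_id: str) -> list[StepRecord]:
    """Walk from a final output back to its origin and return the steps oldest-first."""
    chain: list[StepRecord] = []
    seen: set[str] = set()
    current: Optional[str] = output_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current}")
        seen.add(current)
        rec = store.get(current)
        if rec is None:
            raise LineageGap(f"record {current} was not retained")
        chain.append(rec)
        current = rec.parent_id
    return list(reversed(chain))
```

If only the final output is kept (the weakness described above), `replay_chain` fails at the first missing upstream step, which is the point: the gap becomes visible before an examination does.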

Retention should therefore mirror governance, not convenience.

In regulated finance, documentation has limited value if it can’t be retrieved, reconstructed, and reviewed when required. Record retention and audit replay are the mechanisms that allow firms to demonstrate that governance, supervision, and accountability were not only defined but actually applied.

Regulators don’t evaluate records in isolation. They evaluate whether a firm can replay decisions in context, using contemporaneous records that reflect what was known and decided at the time. That is why retention and replay are essential to defensible data governance.
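Replaying "what was known and decided at the time" amounts to a point-in-time query over records that are appended rather than overwritten. The minimal sketch below assumes a hypothetical append-only log of timestamped key/value entries. It rebuilds the state as of a given moment and ignores anything recorded afterwards.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Entry:
    recorded_at: datetime  # when the firm recorded this fact
    key: str               # hypothetical fact name, e.g. "approved_dataset"
    value: str

def state_as_of(log: list[Entry], as_of: datetime) -> dict[str, str]:
    """Rebuild what was on record at `as_of`, ignoring anything recorded later."""
    state: dict[str, str] = {}
    for e in sorted(log, key=lambda e: e.recorded_at):
        if e.recorded_at > as_of:
            break
        # Later entries supersede earlier ones in the reconstructed view,
        # but nothing is removed from the underlying log.
        state[e.key] = e.value
    return state
```

Because the log is never edited in place, the same query can answer both "what is approved now" and "what was approved when this report was produced".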


Informal systems create replay gaps

Many data-related decisions occur in informal systems such as spreadsheets, ad hoc analyses, emails, or collaboration tools. While these may be operationally efficient, they often fall outside formal retention and archiving frameworks.

When records exist only in fragmented or transient systems, firms may be unable to reproduce decision context during an examination. Regulators generally treat such gaps as governance failures, even when the underlying decisions were reasonable.

Establishing clear expectations for where records must live is essential to audit readiness.
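Clear expectations about where records must live can be checked mechanically once every decision artifact carries its storage location. The sketch below assumes a hypothetical set of approved, retention-governed systems (`APPROVED_SYSTEMS`). It flags artifacts that sit anywhere else, such as local spreadsheets or chat threads, as potential replay gaps.

```python
from dataclasses import dataclass

# Hypothetical names for systems the firm has designated as retention-governed.
APPROVED_SYSTEMS = frozenset({"records_archive", "model_registry", "email_archive"})

@dataclass(frozen=True)
class DecisionArtifact:
    artifact_id: str
    system: str  # where the artifact currently lives, e.g. "local_spreadsheet"

def replay_gaps(artifacts: list[DecisionArtifact]) -> list[str]:
    """Return IDs of artifacts stored outside the approved retention systems."""
    return [a.artifact_id for a in artifacts if a.system not in APPROVED_SYSTEMS]

arts = [
    DecisionArtifact("a1", "model_registry"),
    DecisionArtifact("a2", "local_spreadsheet"),
    DecisionArtifact("a3", "chat_thread"),
]
print(replay_gaps(arts))  # ['a2', 'a3']
```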

Why this matters before analytics or AI

Analytics and AI systems accelerate decision-making but can obscure the intermediate steps that produced a result. Large language models, in particular, generate outputs through the interaction of training data, model versions, system settings, and user prompts. Without deliberate retention and replay design, firms may find themselves unable to explain how automated outputs were generated, even when the outputs themselves are preserved.

Regulators don’t accept system complexity as an excuse for incomplete records. Firms using advanced analytics or AI are often expected to demonstrate stronger retention and replay capabilities, including the ability to reconstruct the context in which an output was produced. This may require retaining prompts, model or version identifiers, configuration settings, approval records, and monitoring results that collectively explain how judgment was applied through the system.
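The items listed above (prompt, model or version identifier, configuration settings, approval record, monitoring results) can be bundled into one retained record per output. This is a minimal sketch, assuming a hypothetical `AIOutputRecord` structure and a JSON archive format. Serializing with sorted keys is a design choice so that the same record always archives to the same text.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class AIOutputRecord:
    """Hypothetical retention record for one model-generated output."""
    output_id: str
    prompt: str
    model_id: str     # model or version identifier
    settings: dict    # configuration settings in effect for this call
    output_text: str
    approved_by: str  # approval record for this use of the system
    monitoring_notes: list = field(default_factory=list)  # supervision over time
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def to_archive(rec: AIOutputRecord) -> str:
    """Serialize deterministically (sorted keys) for the archive."""
    return json.dumps(asdict(rec), sort_keys=True)

def from_archive(blob: str) -> AIOutputRecord:
    """Restore a record from its archived JSON form."""
    return AIOutputRecord(**json.loads(blob))
```

Keeping the output and its generation context in a single record means replay does not depend on reassembling fragments from separate logs.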

Designing retention and audit replay into data and AI workflows before automation scales is what preserves accountability. It ensures firms can demonstrate not just what an automated system produced, but why it produced it, who approved its use, and how its behavior was supervised over time.

Additional Resources

SEC — Books and Records Rule (Rule 204-2)

Establishes that firms must retain records in a manner that allows regulators to reconstruct advisory activities and decisions, reinforcing replay as a core supervisory expectation rather than a storage exercise.

FINRA Rule 4511 — General Requirements (Books and Records)

Requires broker-dealers to preserve records in a way that supports review and examination, implicitly requiring contextual reconstruction of decisions, not just final outputs.

FINRA Rule 3110 — Supervision (Conceptual Overview)

Emphasizes that supervisory systems must be demonstrable through retained evidence, particularly where automated or high-volume processes are involved.

SEC Division of Examinations — Examination Observations and Risk Alerts

Frequently cite inadequate retention and inability to reconstruct decisions as indicators of weak governance, especially in data-driven and automated workflows.

COSO — Internal Control Framework (Information & Communication, Monitoring Activities)

Frames record retention as necessary to evidence that controls operated as designed and that oversight can be evaluated after the fact.

Basel Committee on Banking Supervision — Risk Data Aggregation and Reporting (BCBS 239)

Reinforces the expectation that firms maintain traceability and reproducibility of decisions derived from data, particularly where aggregation and automation are involved.

Enterprise Risk Management Guidance on Auditability

Establishes that systems must be designed so decisions can be reconstructed in context, not inferred from outcomes alone.

NIST — AI Risk Management Framework (AI RMF)

Emphasizes the importance of traceability, documentation, and versioning for AI systems, including retention of inputs, configurations, and oversight actions that shape outputs.

NIST — Data Governance and Risk Management Concepts

Treats retention and replay as accountability mechanisms that allow organizations to explain how decisions were made over time, particularly in automated environments.

Model Risk Management Guidance (SR 11-7 Legacy Framework)

Introduced the expectation that models, assumptions, and supporting data be retained in a manner that allows review, validation, and reconstruction of outcomes.

UK Information Commissioner’s Office — Accountability and Auditability in Automated Systems

Discusses why retention of decision context (including inputs and configuration) is critical where automated tools influence outcomes.

Industry Commentary on Audit Replay for AI and Analytics

Explores how replay shifts from reproducing outputs to reconstructing decision conditions in probabilistic or evolving systems.

Academic Literature on Traceability in Automated Decision Systems

Examines the distinction between outcome replication and decision reconstruction, reinforcing why replay focuses on context rather than determinism.