Supervision applies to data use, not just outputs

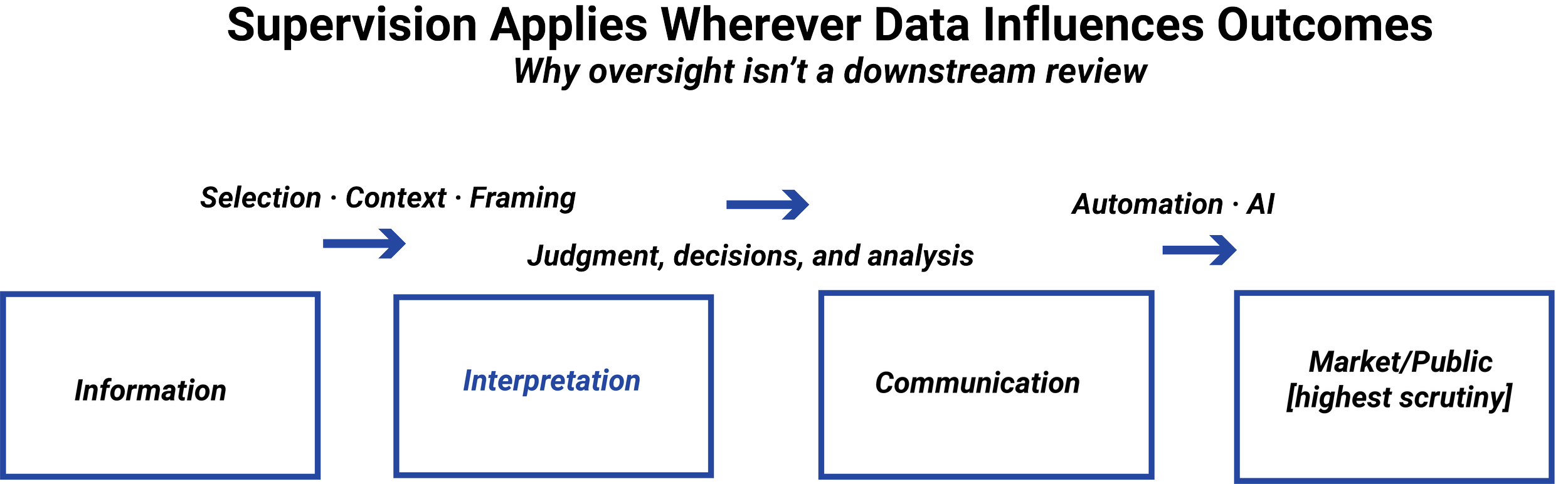

A common misconception is that supervision begins when information is communicated externally or when a formal product is released. In reality, supervisory expectations apply much earlier.

When data is used to inform analysis, segmentation, prioritization, or internal decision-making, regulators expect firms to have controls in place that govern how that data is selected, interpreted, and applied. The fact that a use case is internal or exploratory doesn’t remove the need for oversight if the data meaningfully influences behavior or decisions.

Supervision is therefore tied to use, not visibility.

What regulators mean by supervision

Supervision, in a regulatory context, isn’t synonymous with approval. Instead, it’s a system of accountability that ensures data usage is consistent with firm policies, regulatory obligations, and stated purposes.

Effective supervision includes clearly defined roles, documented review processes, escalation paths, and evidence that oversight actually occurred. It also requires that supervisors understand the nature of the data being used and the implications of how it’s interpreted.

Delegating data use to systems, vendors, or junior staff doesn’t delegate responsibility. Regulators expect supervision to follow the data wherever it is applied.

Clear ownership of supervisory responsibility

One of the most common weaknesses regulators identify is unclear supervisory ownership. Data may pass through multiple teams (operations, analytics, marketing, compliance) without any single function being clearly accountable for how it's ultimately used.

Effective supervisory structures assign responsibility explicitly. Someone must be able to explain why a particular dataset was used in a particular way, who reviewed that use, and what controls governed the process. When responsibility is diffused, accountability breaks down.

Supervision must be assignable to people and roles, not just documented in policy.
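This section turns on three questions: why a dataset was used in a particular way, who reviewed that use, and what controls governed it. One way to make them concrete is a data-use record that names an accountable owner and reports which of those questions it cannot yet answer. The minimal Python sketch below does that. The `DataUseRecord` class, its field names, the example dataset name, and the wording of the gaps are all hypothetical. It is an illustration, not a regulatory template or any particular firm's system.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class DataUseRecord:
    """Hypothetical record of one use of a dataset (illustrative field names)."""
    dataset: str                          # which data was used
    use_case: str                         # how it was applied, e.g. segmentation
    rationale: str                        # why this dataset was used this way
    accountable_owner: str                # the person or role answerable for the use
    reviewer: Optional[str] = None        # who reviewed the use, if anyone
    review_date: Optional[date] = None    # when that review happened
    controls: list[str] = field(default_factory=list)  # controls governing the process

    def missing_answers(self) -> list[str]:
        """List the supervisory questions this record cannot yet answer."""
        gaps = []
        if not self.accountable_owner.strip():
            gaps.append("who is accountable")
        if not self.rationale.strip():
            gaps.append("why the data was used")
        if not self.reviewer or self.review_date is None:
            gaps.append("who reviewed the use")
        if not self.controls:
            gaps.append("what controls governed it")
        return gaps


# Example: an owned use that has not yet been reviewed or tied to controls.
record = DataUseRecord(
    dataset="client_activity_q3",         # hypothetical dataset name
    use_case="prioritizing client outreach",
    rationale="Identify clients with recent account activity",
    accountable_owner="Head of Client Analytics",
)
print(record.missing_answers())
# → ['who reviewed the use', 'what controls governed it']
```

The sketch reports gaps rather than raising errors, so a record can exist while a use is still under review. A firm could instead choose to block the use until the list is empty.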

In regulated financial organizations, supervision isn’t optional and isn’t abstract. It’s the mechanism regulators rely on to ensure that data is used consistently, responsibly, and within permitted boundaries.

Many firms treat data governance as a policy exercise and supervision as a downstream review function. Regulators don’t make that distinction. From their perspective, supervision must be embedded wherever data influences decisions, communications, or outcomes.

This lesson explains what regulators expect when they evaluate how data is supervised, and why informal or assumed oversight is rarely sufficient.


Documentation as evidence of supervision

From a regulatory standpoint, supervision must be demonstrable. Policies and intentions are not sufficient on their own. Regulators look for documentation that shows how supervision was applied in practice.

This may include review records, approval logs, documented rationale for data use, and records of exceptions or escalations. When documentation is missing, regulators often assume that supervision didn’t occur, even if informal review took place.

You’ll hear this stated multiple times in this course: Documentation isn’t administrative overhead; it’s the evidence that supervision existed.
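Regulators tend to read missing documentation as missing supervision. A practical way to apply that idea is to check each data use against its evidence log and flag anything left undocumented. The Python sketch below assumes a hypothetical `EvidenceEntry` log and a firm-defined minimum of one documented rationale plus one review record per use. The names, the evidence kinds, and that minimum are illustrative assumptions, not requirements taken from any rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceEntry:
    """Hypothetical documentation entry tied to one data use."""
    use_id: str
    kind: str      # e.g. "rationale", "review", "approval", "exception", "escalation"
    author: str


# Hypothetical firm-defined minimum evidence for every data use.
REQUIRED_KINDS = frozenset({"rationale", "review"})


def find_unevidenced_uses(use_ids: list[str], log: list[EvidenceEntry]) -> dict[str, list[str]]:
    """Map each use ID to the required evidence kinds missing from the log.

    A use with no record is treated as unsupervised, even if an informal
    review may have happened.
    """
    present: dict[str, set[str]] = {}
    for entry in log:
        present.setdefault(entry.use_id, set()).add(entry.kind)
    gaps = {}
    for use_id in use_ids:
        missing = REQUIRED_KINDS - present.get(use_id, set())
        if missing:
            gaps[use_id] = sorted(missing)
    return gaps


log = [
    EvidenceEntry("seg-001", "rationale", "analyst_a"),
    EvidenceEntry("seg-001", "review", "supervisor_b"),
    EvidenceEntry("prio-007", "rationale", "analyst_c"),
]
print(find_unevidenced_uses(["seg-001", "prio-007", "mkt-003"], log))
# → {'prio-007': ['review'], 'mkt-003': ['rationale', 'review']}
```

Notice that `mkt-003` is flagged for everything: a use that never entered the log cannot show that supervision happened at all.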

Why this matters before analytics or AI

Analytics and AI systems increase both the speed and scale of data use. Without clear supervisory expectations established upfront, these systems can propagate unsupervised decisions rapidly and invisibly.

When regulators review AI-assisted or automated workflows, they don’t relax supervisory expectations. Instead, they expect firms to demonstrate how supervision was designed into the process, how exceptions are handled, and how accountability is maintained.

Establishing supervisory expectations at the data level is what allows advanced tools to be deployed without creating unmanageable risk.
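In an automated or AI-assisted workflow, supervision designed into the process might take the form of a gate. For each automated output, the gate decides whether the output can proceed or must be escalated to a person, and it records who is accountable in either case. The Python sketch below assumes a hypothetical confidence score on each output and treats low-confidence outputs as the exceptions. The `SupervisedStep` name, the 0.8 threshold, and the log fields are illustrative assumptions, not a standard or a prescribed design.

```python
from dataclasses import dataclass, field


@dataclass
class SupervisedStep:
    """Hypothetical gate that builds supervision into one automated step."""
    accountable_owner: str          # role answerable for outputs that proceed automatically
    escalation_contact: str         # role that reviews exceptions
    min_confidence: float = 0.8     # hypothetical threshold; below it, a person must review
    audit_log: list[dict] = field(default_factory=list)

    def route(self, item_id: str, output: str, confidence: float) -> str:
        """Decide whether an automated output proceeds or is escalated, and log it."""
        if confidence >= self.min_confidence:
            status, accountable = "proceed", self.accountable_owner
        else:
            status, accountable = "escalated", self.escalation_contact
        # Log every outcome, not just exceptions, so oversight can be evidenced later.
        self.audit_log.append({
            "item_id": item_id,
            "output": output,
            "confidence": confidence,
            "status": status,
            "accountable": accountable,
        })
        return status


step = SupervisedStep("Head of Analytics", "Compliance Reviewer")
print(step.route("client-101", "high priority", 0.93))  # → proceed
print(step.route("client-102", "high priority", 0.41))  # → escalated
print(len(step.audit_log))                              # → 2
```

The design logs routine cases as well as escalations. That way the firm can show how accountability was maintained across every automated decision, not only the ones that were flagged.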

Additional Resources

FINRA Rule 3110: Supervision

Requires each member firm to establish and maintain a system to supervise the activities of its associated persons that is reasonably designed to achieve compliance with applicable securities laws, regulations, and FINRA rules. Those activities include how information is used in analytics, communications, and internal decision-making.

SEC Books and Records Rule (Rule 204-2 under the Investment Advisers Act)

Requires registered investment advisers to make and keep specified books and records. Reinforces that supervision must be demonstrable through records, not inferred from outcomes, so oversight must exist, and be recorded, wherever data influences decisions.

SEC Division of Examinations: Guidance on Governance and Controls

Examination materials consistently evaluate whether oversight is embedded upstream rather than applied only after issues surface.

COSO Internal Control Framework (Control Environment and Monitoring Activities)

Treats supervision as an operational control embedded in day-to-day processes and supported by ongoing monitoring, rather than relying only on periodic or retrospective review.

Office of the Comptroller of the Currency (OCC): Heightened Standards and Governance Expectations

Reinforces the expectation that supervisory accountability be clearly assigned and integrated into business processes.

Basel Committee on Banking Supervision: Governance and Risk Management Principles

Highlights that accountability for information use must be clearly owned, particularly where data supports risk decisions or external communications.

Organisation for Economic Co-operation and Development (OECD): Principles on Accountability and Oversight

Provides a policy-level articulation of supervision as a mechanism for assigning responsibility and controlling downstream risk.

Enterprise Supervision Models: Embedded vs. Post-Hoc Review

Industry governance literature consistently identifies embedded supervision as more effective than reliance on downstream review or exception handling.

Operational Risk Management Literature: Control Design and Oversight

Discusses how weak supervisory design often precedes broader governance and compliance failures.